What is decentralized AI?

In the swiftly evolving digital era, the emergence of decentralized AI represents a groundbreaking shift towards a more equitable, secure, and innovative technological future. This paradigm shift seeks to address the centralization concerns prevalent in the current AI landscape, characterized by a few dominant entities controlling vast amounts of data and computational resources. Decentralized AI, by leveraging blockchain technology and decentralized networks, promises a new era where innovation, privacy, and access are democratized.

The last decade has witnessed unprecedented advancements in AI, propelled by breakthroughs in machine learning, particularly deep learning. Entities like OpenAI and Google have been at the forefront, developing models that have significantly pushed the boundaries of what AI can achieve. Despite this success, the rapid advancement has led to a concentration of power and control, raising concerns over privacy, bias, and accessibility.

In response to these challenges, the concept of decentralized AI has gained momentum. Decentralized AI aims to distribute the development and deployment of AI across numerous independent participants. This approach is not just a technical necessity but a philosophical stance against the monopolistic tendencies seen in the technology sector, especially within AI.

The necessity of decentralized AI

The integration of blockchain technology into AI frameworks introduces a layer of transparency, security, and integrity that is difficult to achieve in centralized systems. By recording data exchanges and model interactions on a blockchain, decentralized AI makes the record of AI operations publicly verifiable and tamper-evident, fostering trust among users and developers alike.
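To make the tamper-evidence idea concrete, here is a minimal sketch, assuming inference records are kept in a hash chain where each entry commits to the hash of the previous one (the same basic structure blockchains use to link blocks). The `HashChainLog` class and the record fields are hypothetical and not taken from any particular protocol; on a real network the latest hash would be anchored on-chain rather than held in a local list.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class HashChainLog:
    """Append-only log where each entry commits to the hash of the previous one."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        # Canonical JSON so the same record always produces the same hash.
        payload = json.dumps(record, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1][1] if self.entries else "0" * 64
        entry_hash = self._digest(prev_hash, record)
        self.entries.append((record, entry_hash))
        return entry_hash

    def verify(self) -> bool:
        # Recompute every link; any edited record breaks the chain from that point on.
        prev_hash = "0" * 64
        for record, entry_hash in self.entries:
            if self._digest(prev_hash, record) != entry_hash:
                return False
            prev_hash = entry_hash
        return True


log = HashChainLog()
log.append({"model": "model-v1", "input_hash": "ab12", "output_hash": "cd34"})
log.append({"model": "model-v1", "input_hash": "ef56", "output_hash": "0a9b"})
print(log.verify())  # True

# Tampering with an earlier record is detected.
log.entries[0][0]["output_hash"] = "ffff"
print(log.verify())  # False
```

Note the limit of this structure: altering a past record is detectable, but the log alone cannot prove that the model computed each output correctly. That is the job of the verification mechanisms discussed later.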

One of the cornerstone challenges decentralized AI addresses is data management. In a decentralized AI ecosystem, data ownership remains with individuals, who can choose to contribute their data for AI training in a secure, anonymous manner. This not only enhances privacy but can also encourage the development of more diverse and less biased AI models.

Decentralized AI proposes a novel approach to computational resource allocation, in which individuals can contribute their computing power to AI model training. This peer-to-peer network model aims to distribute computational resources more equitably, making AI development accessible to a broader audience. To motivate participation in the network, these systems use token incentives - something that isn’t possible in the walled gardens of centralized AI incumbents. Before we dig deeper, here is a market map of the decentralized AI landscape, courtesy of Galaxy:

Contributors of data and computational resources are rewarded with tokens, which can be used within the ecosystem or traded, creating an economy around AI development and deployment. While decentralized AI presents a promising solution to many of the current AI ecosystem's flaws, it is not without challenges. Issues such as network scalability, consensus mechanisms for model training, and ensuring the quality of data in a distributed system are critical areas requiring further progress.
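As a minimal sketch of how such rewards might be computed, assume a network emits a fixed number of tokens per epoch and splits them pro rata by contribution units (GPU-hours, accepted data samples, and so on). The function name, the contributor names, and the rule that rounding dust goes to the largest contributor are all assumptions for illustration; real networks score contributions in their own ways.

```python
def distribute_rewards(contributions: dict[str, int], epoch_emission: int) -> dict[str, int]:
    """Split a fixed per-epoch token emission pro rata by contribution units.

    Shares are floored to whole tokens so the payout never exceeds the emission;
    the leftover rounding dust goes to the largest contributor.
    """
    total = sum(contributions.values())
    if total == 0:
        return {who: 0 for who in contributions}
    payouts = {who: epoch_emission * units // total for who, units in contributions.items()}
    dust = epoch_emission - sum(payouts.values())
    top = max(contributions, key=contributions.get)
    payouts[top] += dust
    return payouts


# Hypothetical contributors and units.
rewards = distribute_rewards({"gpu_node": 600, "data_alice": 300, "data_bob": 100}, 1_000)
print(rewards)  # {'gpu_node': 600, 'data_alice': 300, 'data_bob': 100}
```

Using integer arithmetic avoids paying out fractional or floating-point token amounts, which matters when balances live in a smart contract.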

Despite these challenges, blockchain-enabled AI has significant potential to foster innovation and inclusivity. By lowering the barriers to entry for AI development and ensuring a more diverse data pool, decentralized AI could lead to the creation of more robust, ethical, and innovative AI solutions.

As AI continues to evolve, it will also necessitate a reevaluation of current policies and regulations surrounding AI and data privacy. A distributed landscape offers a unique set of challenges and opportunities for lawmakers, necessitating a balanced approach that fosters innovation whilst protecting individual rights. The future of AI is not just about technological advancement but also about reimagining the governance and economic models underpinning AI development. As we move forward, the focus will increasingly shift towards ensuring that AI serves the broader interests of society, promoting fairness, transparency, and inclusivity.

The journey towards a decentralized AI ecosystem is fraught with technical, ethical, and regulatory challenges, but the promise of a more equitable, secure, and innovative future makes it a worthy endeavor. As we stand at the threshold of this new era in AI, it is crucial for stakeholders across the spectrum to collaborate, innovate, and navigate the complexities of decentralized AI, ensuring that the future of AI is democratized and beneficial for all.

Verification mechanisms

One of the major benefits of decentralized AI is the ability to verify outputs. When users interact with closed-source LLMs, they typically have no way to confirm that the model they are told they are using is actually the one serving their requests. Decentralized AI projects are taking various approaches to this problem. Some favor optimistic fraud proofs (optimistic ML, or opML), while others favor zero-knowledge proofs (zkML); there isn’t a clear answer yet as to which is better.

Blockchains are designed to be immutable, cryptographically verifiable ledgers for peer-to-peer payments - the integration of blockchain technology into artificial intelligence systems is a massive opportunity for two growing industries to complement each other in a mutually beneficial way. If you’re of the belief that blockchains are here to stay and will integrate with traditional financial systems for the better, why not advocate for blockchains extending their utility into machine learning as well?

Optimistic machine learning performs verification on the premise of trust. All inferences are assumed to be correct, much like optimistic rollups on Ethereum assume a posted state is valid unless proven otherwise. Projects implement this in different ways, but most use a system of submitters and watchers: a result is posted, and during a challenge window any watcher that re-executes the computation and finds a mismatch can dispute it with a fraud proof. The approach is attractive because, in theory, it remains secure as long as a single watcher is honest. However, no single actor can realistically re-check every output, and while the optimistic approach is cheaper than zero-knowledge proofs, its verification costs scale roughly linearly with the amount of computation that has to be re-executed.
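The one-honest-watcher assumption can be illustrated with a deliberately simplified simulation, assuming each watcher either re-executes the model or stays silent. `Claim`, `settle` and `toy_model` are hypothetical names; the sketch omits challenge-window timing, bonds, and the interactive dispute that real opML systems use to pinpoint the faulty step. It also shows where the linear cost comes from: every honest watcher has to re-run the full computation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Claim:
    """A submitter's posted inference result awaiting the challenge window."""
    model_input: int
    claimed_output: int


def toy_model(x: int) -> int:
    # Stand-in for an ML model; deterministic so watchers can re-execute it.
    return 2 * x + 1


def settle(claim: Claim, model: Callable[[int], int], watchers_honest: list[bool]) -> str:
    """Finalize a claim unless some honest watcher disputes it during the window.

    Honest watchers re-run the model and dispute a mismatch; dishonest or
    offline watchers stay silent. One honest watcher is enough to catch fraud.
    """
    for honest in watchers_honest:
        if honest and model(claim.model_input) != claim.claimed_output:
            # A real network would now run a fraud-proof dispute and slash the submitter.
            return "rejected"
    return "finalized"


print(settle(Claim(3, 7), toy_model, [False, False, True]))   # finalized (claim is correct)
print(settle(Claim(3, 8), toy_model, [False, False, True]))   # rejected (one honest watcher)
print(settle(Claim(3, 8), toy_model, [False, False, False]))  # finalized: fraud slips through
```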

Zero-knowledge proofs are more efficient to verify, since with succinct proof systems the proof size and verification cost do not grow linearly with model size. The mathematics behind zero-knowledge proving is complex, but it has become more accessible thanks to projects like Starknet, zkSync, RISC Zero and Axiom building rollups and middleware that bring zero-knowledge proving to blockchains. Because their soundness rests on mathematics rather than on someone watching, zero-knowledge proofs are an extremely strong choice for verifying outputs: barring implementation bugs or broken cryptographic assumptions, a prover cannot cheat. Unfortunately for decentralized AI, generating these proofs for large models is extremely expensive and not currently feasible at scale. Despite this reality, many projects are building infrastructure for the gradual integration of zero-knowledge technology and are making positive strides in this sector.
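As a minimal illustration of the prove/verify split, the sketch below implements a toy Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. This is not zkML, and the group parameters are far too small to be secure; they are assumptions chosen for readability. What it does show is the shape zkML systems share: the prover does the work, while the verifier checks a short equation and learns nothing about the secret.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with P and Q prime; G = 4 generates the order-Q subgroup.
# Insecurely small, for illustration only.
P = 1019
Q = 509
G = 4


def _challenge(y: int, r: int) -> int:
    # Fiat-Shamir: derive the verifier's challenge by hashing the public transcript.
    data = f"{G}|{y}|{r}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    k = secrets.randbelow(Q - 1) + 1  # fresh random nonce
    r = pow(G, k, P)
    c = _challenge(y, r)
    s = (k + c * x) % Q
    return y, r, s


def verify(y: int, r: int, s: int) -> bool:
    """Accept iff G^s == r * y^c mod P; the verifier never sees x."""
    c = _challenge(y, r)
    return pow(G, s, P) == (r * pow(y, c, P)) % P


y, r, s = prove(123)
print(verify(y, r, s))            # True
print(verify(y, r, (s + 1) % Q))  # False: a forged response fails the check
```

The check works because G^s = G^(k + c*x) = G^k * (G^x)^c = r * y^c, with exponents reduced mod Q since G has order Q.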

Data and infrastructure

LLMs are trained on immense amounts of data, and a great deal of work happens between pre-training a model and getting it production-ready for general use. Pre-training involves gathering data from wherever it is available, curating it so the model learns from high-quality material, and tokenizing it to feed into the model. In the context of decentralized AI, blockchains seem like a natural fit, since on-chain data forms an immutable catalog that cannot be altered after the fact. However, blockchains are not yet capable of computing over that much data: even the most performant chains offer throughput and per-transaction compute limits that are orders of magnitude below what training workloads require, and that gap is unlikely to close in the foreseeable future.

There are numerous decentralized AI projects that reward users for submitting data, with the aforementioned verification processes applied afterwards to ensure quality submissions. Integrating cryptoeconomic reward mechanisms lets users take part in creating large, open-source models that could one day compete with the centralized alternatives dominating the market. As it stands, very few teams have accumulated data at the scale available to centralized labs, which is understandable given how nascent decentralized AI is.

In the context of decentralized AI, there isn’t a standardized mission across these protocols: they are pursuing different approaches and competing with one another as more protocols launch and fight over compute. Teams are still deciding how best to incentivize their users and exploring which types of data will be most useful to them. Blockchains hold so much data that sifting through all of it is tedious, so many teams are building structured data products and applications that non-AI crypto projects can integrate more easily. A few projects also aim to corner the market on data or shape it into streamlined, useful products for end users - more on this in a later section. Regardless of its immediate applicability, there is a war for data under way in traditional machine learning, and decentralized AI is no different.

Platforms for AI and crypto collaboration

While still early in its lifecycle, decentralized AI is already seeing platforms develop for entire ecosystems and product suites built with the future in mind. OpenAI is the best real-world example of a successful AI lab that has built additional products on top of brilliant models. In crypto, there is still a large gap between innovation and usage, even outside of decentralized AI.

Teams building in the decentralized AI space are taking various approaches to platform building. Some are focusing on consumer platforms that pair models with appealing UX, others are working on infrastructure for autonomous agents, and still others are building large networks with subnetworks of other models operating underneath. There isn’t yet a clear winning approach or a sense of what will work in the long run, so different strategies are unfolding as more developers enter the space.

These will be examined in the next sections, but first we will make a few distinctions. The idea of a decentralized platform is somewhat ambiguous: most of these protocols have visions bold enough that they could be mistaken for platforms. For the sake of simplicity, we’ll define a decentralized AI platform as any product that’s built to stand on its own with an eventual ecosystem of additional apps built atop it. There are numerous GPU marketplaces being built in the decentralized AI sector, and while technically these are platforms, they are limited in scope and are usually built with different goals than the protocols we will discuss.

A closer look at the decentralized AI ecosystem

With that context, we can take a look at a handful of the most promising protocols across these various subsectors. There are many more that could be analyzed, but we chose a curated list to provide as diverse an example base as possible. For more information on decentralized AI, there are a few excellent reports written here and here.

Ritual, Ora, Truebit and Modulus

Starting with Ritual: this protocol has been seeing significant interest from nearly every corner of the crypto industry. Founded by a team of experienced builders in the crypto and AI space, Ritual is envisioned as an open, modular, and sovereign execution layer for AI.

The core of Ritual is Infernet, a decentralized oracle network (DON) optimized for AI that is already live and operational. Infernet lets existing protocols and applications integrate AI models with minimal friction by exposing interfaces through which smart contracts can request inference from these models. This enables a wide range of use cases, from advanced analytics and predictions to generative AI applications whose outputs are delivered on-chain.
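The request-and-callback pattern that oracle networks like this generally follow can be sketched in plain Python. Every name here (`Coordinator`, `ConsumerContract`, `request_compute`, `receive_compute`, `node_poll`, `sentiment-v0`) is hypothetical and not Infernet's actual SDK; in practice the coordinator and consumer would be smart contracts and the node an off-chain process watching for their events.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ConsumerContract:
    """Stands in for an on-chain contract that wants an AI inference result."""
    results: dict = field(default_factory=dict)

    def receive_compute(self, request_id: int, output: str) -> None:
        # Callback invoked by the off-chain node once the inference is done.
        self.results[request_id] = output


@dataclass
class Coordinator:
    """Stands in for an on-chain contract that queues requests for off-chain nodes."""
    pending: deque = field(default_factory=deque)
    next_id: int = 0

    def request_compute(self, consumer: ConsumerContract, model_id: str, payload: str) -> int:
        request_id = self.next_id
        self.next_id += 1
        self.pending.append((request_id, consumer, model_id, payload))
        return request_id


def node_poll(coordinator: Coordinator, models: dict[str, Callable[[str], str]]) -> int:
    """Off-chain node loop body: serve every pending request and deliver callbacks."""
    served = 0
    while coordinator.pending:
        request_id, consumer, model_id, payload = coordinator.pending.popleft()
        consumer.receive_compute(request_id, models[model_id](payload))
        served += 1
    return served


def toy_sentiment(text: str) -> str:
    # Placeholder for a real model served by the node.
    return "positive" if "strong" in text else "neutral"


coordinator = Coordinator()
app = ConsumerContract()
rid = coordinator.request_compute(app, "sentiment-v0", "ETH looks strong today")
node_poll(coordinator, {"sentiment-v0": toy_sentiment})
print(app.results[rid])  # positive
```

Splitting the flow this way keeps the expensive model execution off-chain while the contract only stores the request and the returned result.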

Beyond Infernet, Ritual is also building its own sovereign blockchain with a custom VM to service more advanced AI-native applications. This chain will leverage cutting-edge cryptography like zero-knowledge proofs to ensure scalability and provide strong guarantees around computational integrity, privacy, and censorship resistance - issues that plague the current centralized AI infrastructure. Ritual's grand vision is to become the "Schelling point" for AI in the crypto space, allowing every protocol and application to leverage its AI capabilities as a co-processor.

Ora is a crypto project that is pioneering the integration of artificial intelligence directly onto the Ethereum blockchain. Ora's central offering is the Onchain AI Oracle (OAO), which enables developers, protocols, and dApps to leverage powerful AI models like Llama 2, Grok, and Stable Diffusion right on the Ethereum mainnet.

Ora's innovation lies in its use of optimistic machine learning (opML) to make complex ML computations verifiable on-chain: results are accepted optimistically and can be challenged with fraud proofs. This unlocks a new frontier of "trustless AI" - AI models and inferences that can be integrated into blockchain applications without trusting the party that ran them, with guarantees backed by on-chain dispute resolution. Beyond just inference, Ora envisions a wide range of use cases for on-chain AI, from predictive analytics and asset pricing to advanced simulations, credit/risk analysis, and even AI-powered trading bots and governance systems.

The project has also introduced the "opp/ai" framework, which combines the benefits of opML and zero-knowledge ML (zkML) to provide both efficiency and privacy for on-chain AI use cases. Ora is building an open ecosystem around its Onchain AI Oracle, inviting developers to integrate their own AI models and experiment with the myriad possibilities of trustless AI on Ethereum. With its strong technical foundations and ambitious vision, Ora is poised to be a key player in the emerging intersection of crypto and artificial intelligence.

Truebit is a groundbreaking platform that aims to change the way developers build and deploy decentralized applications. Truebit offers a verified computing infrastructure, enabling the creation of certified, interoperable applications that can seamlessly integrate with any data source, interact across multiple blockchains, and execute complex off-chain computations.

The key innovation behind Truebit is its focus on "transparent computation". Rather than relying solely on on-chain execution, Truebit leverages a decentralized network of nodes to perform complex computations off-chain while keeping their correctness verifiable. This approach allows developers to unlock the full potential of Web3 by offloading the majority of their application logic to the Truebit platform, without sacrificing the trustless guarantees and auditability that blockchain-based systems provide.

The Truebit Verification Game is the central mechanism that ensures the integrity of off-chain computations. Multiple Truebit nodes execute each task in parallel, and if they don't agree on the output, the Verification Game forces them to prove their work at the machine code level. Financial incentives and penalties are used to gamify the process, incentivizing correct behavior and penalizing malicious actors.
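The core of a verification game like this is a bisection protocol: rather than replaying an entire computation, two disagreeing parties binary-search their execution traces until they isolate a single step they disagree on, and only that step is re-executed. Below is a simplified sketch under stated assumptions: the computation is modeled as a list of integer states produced by a toy step function (a real verification game works over machine-level execution states and commits to hashes of them), and all function names are hypothetical.

```python
from typing import Callable

Step = Callable[[int], int]


def honest_step(state: int) -> int:
    # One "instruction" of a toy computation; invertible mod 1_000_003, so traces never re-merge.
    return (3 * state + 1) % 1_000_003


def run_trace(step: Step, start: int, n_steps: int) -> list[int]:
    """Record the state after every step (index 0 is the initial state)."""
    trace = [start]
    for _ in range(n_steps):
        trace.append(step(trace[-1]))
    return trace


def bisect_dispute(trace_a: list[int], trace_b: list[int]) -> int:
    """Binary-search for a step where the two claimed traces start to disagree.

    Assumes both traces agree on the initial state and disagree on the final one.
    Returns i with trace_a[i] == trace_b[i] and trace_a[i + 1] != trace_b[i + 1],
    using O(log n) comparisons instead of replaying the whole computation.
    """
    lo, hi = 0, len(trace_a) - 1  # invariant: agree at lo, disagree at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if trace_a[mid] == trace_b[mid]:
            lo = mid
        else:
            hi = mid
    return lo


def referee(step: Step, trace_a: list[int], trace_b: list[int]) -> str:
    """Re-execute only the single disputed step and report whose trace got it right."""
    i = bisect_dispute(trace_a, trace_b)
    expected = step(trace_a[i])
    if trace_a[i + 1] == expected:
        return "A"
    if trace_b[i + 1] == expected:
        return "B"
    return "neither"


trace_a = run_trace(honest_step, start=7, n_steps=16)
# Solver B runs honestly up to state 9, then reports a wrong state 10 and continues from it.
trace_b = trace_a[:10] + run_trace(honest_step, trace_a[10] + 1, 6)
print(bisect_dispute(trace_a, trace_b))        # 9
print(referee(honest_step, trace_a, trace_b))  # A
```

In a real game, the penalties described above would then be applied to the party whose trace was wrong at the disputed step.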

This transparency and verification process is the key to Truebit's ability to securely integrate decentralized applications with off-chain data sources and services, such as AI models, APIs, and serverless functions. By providing "transcripts" that document the complete execution of a computation task, Truebit ensures that the output can be verified and trusted, even when it originates from centralized web services.

Truebit's architecture includes a "Hub" that oversees the Verification Game, while also being policed by the decentralized nodes. This two-way accountability, inspired by techniques from the DARPA Red Balloon Challenge, helps to resist censorship and further strengthen the trustless guarantees of the platform.

Modulus provides a trusted off-chain compute platform that addresses a critical challenge faced by blockchain-based systems - the limitations of on-chain computation. Modulus utilizes a specialized zk prover that’s extendable to other projects across the industry, and has partnered with Worldcoin, Ion Protocol, Upshot and more. Modulus also offers its own API layered on top of other machine learning APIs, designed to scale horizontally as the industry grows and more existing applications adopt AI processes.

By leveraging cutting-edge cryptographic techniques, Modulus enables developers to seamlessly integrate complex off-chain computations, such as machine learning models, APIs, and other resource-intensive tasks, into their dApps. This is achieved through Modulus' decentralized network of nodes that execute these computations in a verifiable and trustless manner, providing the necessary cryptographic proofs to ensure the integrity of the results.

With Modulus, developers can focus on building innovative dApps without worrying about the complexities and constraints of on-chain execution. The platform's seamless integration with popular blockchain networks, such as Ethereum, and its support for a wide range of programming languages and computational tasks, make it a powerful tool for building the next generation of decentralized applications.

Grass, Ocean and Giza

Grass is a revolutionary decentralized network that is redefining the foundations of artificial intelligence development. At its core, Grass serves as the "data layer" for the AI ecosystem, providing a crucial infrastructure for accessing and preparing the vast troves of data necessary to train cutting-edge AI models.

The key feature behind Grass's data collection is its decentralized network of nodes, where users contribute their unused internet bandwidth to enable the gathering of data from the public web. This data, which would otherwise be inaccessible or prohibitively expensive for many AI labs to obtain, is then made available to researchers and developers through Grass's platform. By incentivizing ordinary people to participate in the network, Grass is democratizing access to one of the most essential raw materials of AI - data!

Beyond just data acquisition, Grass is also expanding its capabilities to address the critical task of data preparation. Grass wishes to become the definitive data layer, not only for crypto, but eventually for all of AI. It’s difficult to imagine a world where a blockchain project uproots an entire industry, though traditional finance is already slowly becoming more interested in the idea of immutable blockchain tech - why not the AI industry as well? By cutting costs and incentivizing users, Grass might become the premier marketplace for data, not just the premier crypto marketplace for data.

Through its in-house vertical, Socrates, Grass is developing automated tools to structure and clean the unstructured data gathered from the web, making it AI-ready. This end-to-end data provisioning service is a game-changer, as data preparation is often the most time-consuming and labor-intensive aspect of building AI models.
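The source does not describe how Socrates works internally, so the following is only a generic sketch of common cleaning steps, using hypothetical function names and sample pages: extract visible text from raw HTML, normalize whitespace, drop fragments too short to be useful, and remove exact duplicates. It uses only Python's standard library.

```python
import hashlib
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def clean_pages(raw_pages: list[str], min_words: int = 5) -> list[str]:
    """Turn raw HTML pages into deduplicated, whitespace-normalized text documents."""
    seen: set[str] = set()
    docs = []
    for html in raw_pages:
        parser = _TextExtractor()
        parser.feed(html)
        parser.close()
        text = re.sub(r"\s+", " ", " ".join(parser.parts)).strip()
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(text.lower().encode()).hexdigest()
        if digest in seen:
            continue  # exact duplicate (case-insensitive)
        seen.add(digest)
        docs.append(text)
    return docs


pages = [
    "<html><body><h1>Hello</h1><p>Decentralized networks can gather public web data.</p>"
    "<script>var x=1;</script></body></html>",
    "<div>hello</div>\n<p>Decentralized networks   can gather public web data.</p>",
    "<p>Too short</p>",
]
print(clean_pages(pages))  # ['Hello Decentralized networks can gather public web data.']
```

Production pipelines typically add near-duplicate detection, language identification and quality scoring on top of steps like these.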

The decentralized nature of Grass is particularly important in the context of AI development. Many large tech companies and websites have a vested interest in controlling and restricting access to their data, often blocking the IP addresses of known data centers. Grass's distributed network circumvents these barriers, ensuring that the public web remains accessible and its data available for training the next generation of AI models.

Ocean Protocol is a pioneering decentralized data exchange protocol that is attempting to disrupt the way artificial intelligence and data are accessed and monetized. At the heart of Ocean's mission is a fundamental goal - to level the playing field and empower individuals, businesses, and researchers to unlock the value of their data and AI models, while preserving privacy and control.

The core of Ocean's technology stack is centered around two key features - Data NFTs and Datatokens, and Compute-to-Data. Data NFTs and Datatokens enable token-gated access control, allowing data owners to create and monetize their data assets through the use of decentralized data wallets, data DAOs, and more. This infrastructure provides a seamless on-ramp and off-ramp for data assets into the world of decentralized finance.
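Token-gated access can be sketched as follows, assuming a consumer holds datatokens and spends one to access a dataset. The `Datatoken` class, the `consume` function, the example URI, and the rule that the spent token returns to the publisher are simplifying assumptions for illustration, not Ocean's contract interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class Datatoken:
    """Hypothetical access token for one dataset; balances are whole tokens."""
    dataset_uri: str
    balances: dict[str, int] = field(default_factory=dict)

    def mint(self, to: str, amount: int) -> None:
        self.balances[to] = self.balances.get(to, 0) + amount

    def transfer(self, sender: str, to: str, amount: int) -> None:
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient datatokens")
        self.balances[sender] -= amount
        self.mint(to, amount)


def consume(token: Datatoken, consumer: str, publisher: str) -> str:
    """Spend one datatoken (returned to the publisher) in exchange for access."""
    token.transfer(consumer, publisher, 1)
    # Stand-in for handing back a download link or starting a compute job.
    return token.dataset_uri


dt = Datatoken("ipfs://example-dataset")
dt.mint("publisher", 10)
dt.transfer("publisher", "alice", 1)  # e.g. alice buys one access token
print(consume(dt, "alice", "publisher"))  # ipfs://example-dataset
```

Because access is just a token balance, datatokens can be priced, pooled or traded like any other fungible token, which is what gives data assets their on-ramp into DeFi.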

Complementing this is Ocean's Compute-to-Data technology, which enables the secure buying and selling of private data while preserving the data owner's privacy. By allowing computations to be performed where the data resides, without the data ever leaving its source, Compute-to-Data addresses a critical challenge in the data economy - how to monetize sensitive data without compromising user privacy.
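The Compute-to-Data idea can be illustrated with a minimal sketch, assuming the data owner whitelists specific algorithms and only their results leave the environment. The class, method names and records are hypothetical. The privacy boundary here is purely conceptual (Python does not stop a caller from reading `_records`); in a real deployment it is enforced by running jobs on the data owner's infrastructure.

```python
from statistics import mean
from typing import Callable


class ComputeToDataEnv:
    """Holds private records and only runs algorithms the data owner approved.

    Callers get back the algorithm's result, ideally an aggregate,
    rather than the raw records themselves.
    """

    def __init__(self, records: list[dict]) -> None:
        self._records = records
        self._approved: dict[str, Callable[[list[dict]], float]] = {}

    def approve(self, name: str, algorithm: Callable[[list[dict]], float]) -> None:
        # The data owner reviews and whitelists an algorithm before anyone can run it.
        self._approved[name] = algorithm

    def run(self, name: str) -> float:
        if name not in self._approved:
            raise PermissionError(f"algorithm {name!r} not approved by data owner")
        return self._approved[name](self._records)


env = ComputeToDataEnv([{"age": 34, "spend": 120.0}, {"age": 51, "spend": 80.0}])
env.approve("avg_spend", lambda rows: mean(r["spend"] for r in rows))
print(env.run("avg_spend"))  # 100.0
```

The approval step matters: an algorithm that simply returned the rows would leak the data, so owners must vet what they allow to run.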

Ocean has cultivated a thriving ecosystem of builders, data scientists, OCEAN token holders, and ambassadors. This lively community is actively exploring the myriad use cases for Ocean's technology, from building token-gated AI applications and decentralized data marketplaces to participating in data challenges and leveraging Ocean's tools for their data-driven research and predictions.

By empowering individuals and organizations to take control of their data and monetize their AI models, Ocean is paving the way for a more equitable and decentralized future in the realm of artificial intelligence and data-driven progress.

Giza is on a mission to revolutionize the integration of machine learning (ML) with blockchain technology. Leading the way for Giza is its Actions SDK, a powerful Python-based toolkit designed to streamline the process of embedding verifiable ML capabilities into crypto workflows.

The Actions SDK grew out of a recognition of the significant challenges that have traditionally hindered the fusion of AI and crypto. Giza's team identified key barriers, such as the lack of ML expertise within the blockchain ecosystem, the fragmentation and quality issues of data access in crypto, and the reluctance of protocols to undergo extensive changes to accommodate ML solutions.

The Actions SDK addresses these challenges head-on, providing developers with a user-friendly and versatile platform to create and manage "Actions" – the fundamental units of functionality within the Giza ecosystem. These Actions serve as the orchestrators, coordinating the various tasks and model interactions required to build trust-minimized and scalable ML solutions for crypto protocols.

At the core of the Actions SDK are the modular "Tasks," which allow developers to break down complex operations into smaller, manageable segments. By structuring workflows through these discrete Tasks, the SDK ensures clarity, flexibility, and efficiency in the development process. Additionally, the SDK's "Models" component simplifies the deployment and execution of verifiable ML models, making the integration of cutting-edge AI capabilities accessible to a wide range of crypto applications.
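To illustrate how small Tasks compose into an Action, here is a plain-Python sketch. Giza's real SDK provides its own decorators and model interfaces, which are not reproduced here; the `task` decorator, the registry, the price data and the decision rule are all hypothetical stand-ins.

```python
from typing import Callable

# Hypothetical registry of Tasks (not Giza's API).
TASK_REGISTRY: dict[str, Callable] = {}


def task(fn: Callable) -> Callable:
    """Mark a function as a small, reusable Task and record it in the registry."""
    TASK_REGISTRY[fn.__name__] = fn
    return fn


@task
def fetch_prices() -> list[float]:
    # Stand-in for pulling on-chain or market data.
    return [101.0, 99.5, 102.5, 103.0]


@task
def preprocess(prices: list[float]) -> list[float]:
    # Simple returns between consecutive prices.
    return [(b - a) / a for a, b in zip(prices, prices[1:])]


@task
def predict(returns: list[float]) -> str:
    # Placeholder decision rule; a real Action would call a verifiable ML model here.
    return "rebalance" if sum(returns) / len(returns) > 0 else "hold"


def rebalance_action() -> str:
    """An Action orchestrates Tasks end to end: fetch, preprocess, predict."""
    return predict(preprocess(fetch_prices()))


print(rebalance_action())  # rebalance
```

Keeping each step as a separate Task means any one of them (for example, swapping the placeholder rule for a proven model) can be replaced without touching the rest of the workflow.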

Morpheus, MyShell and Autonolas

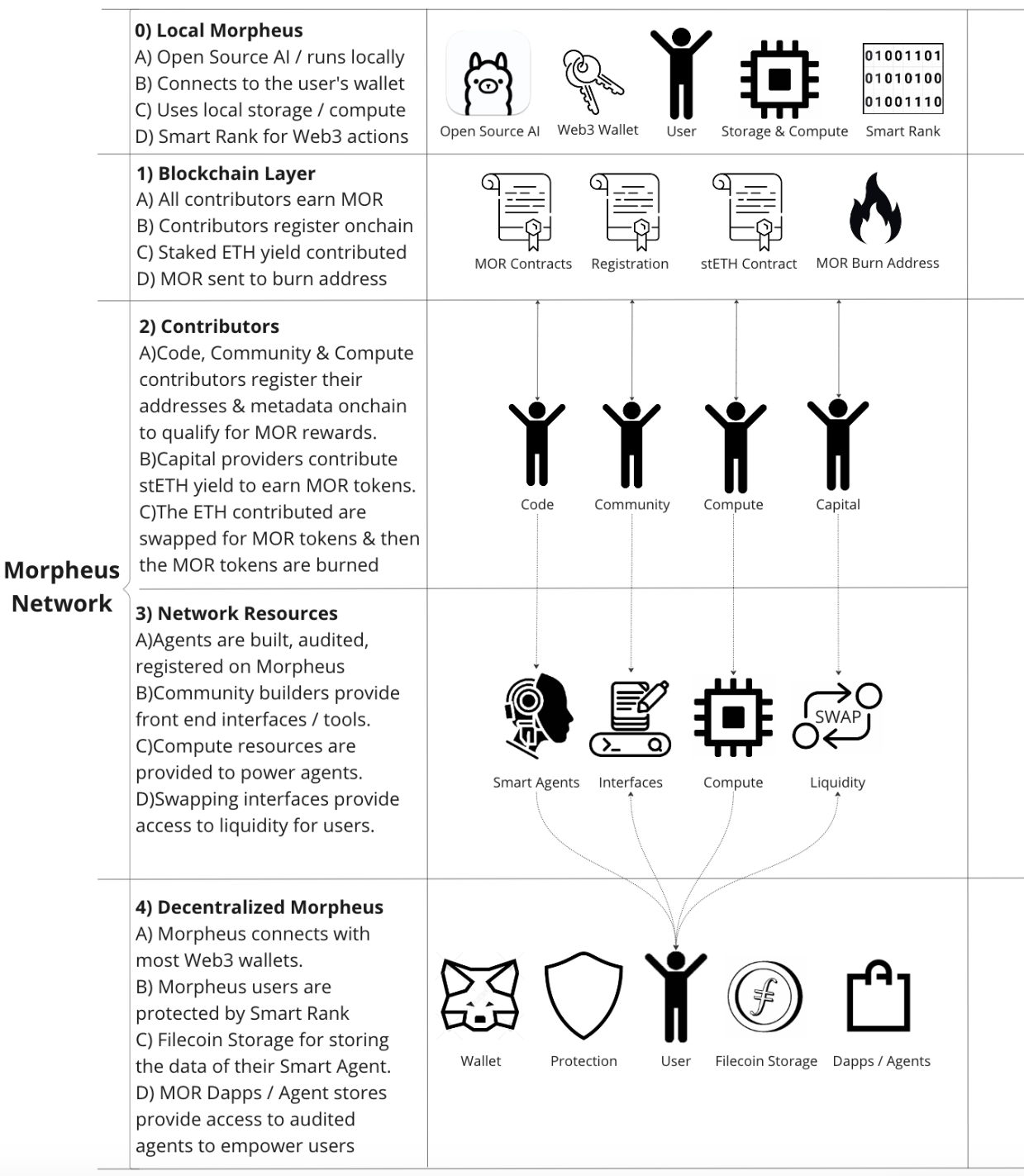

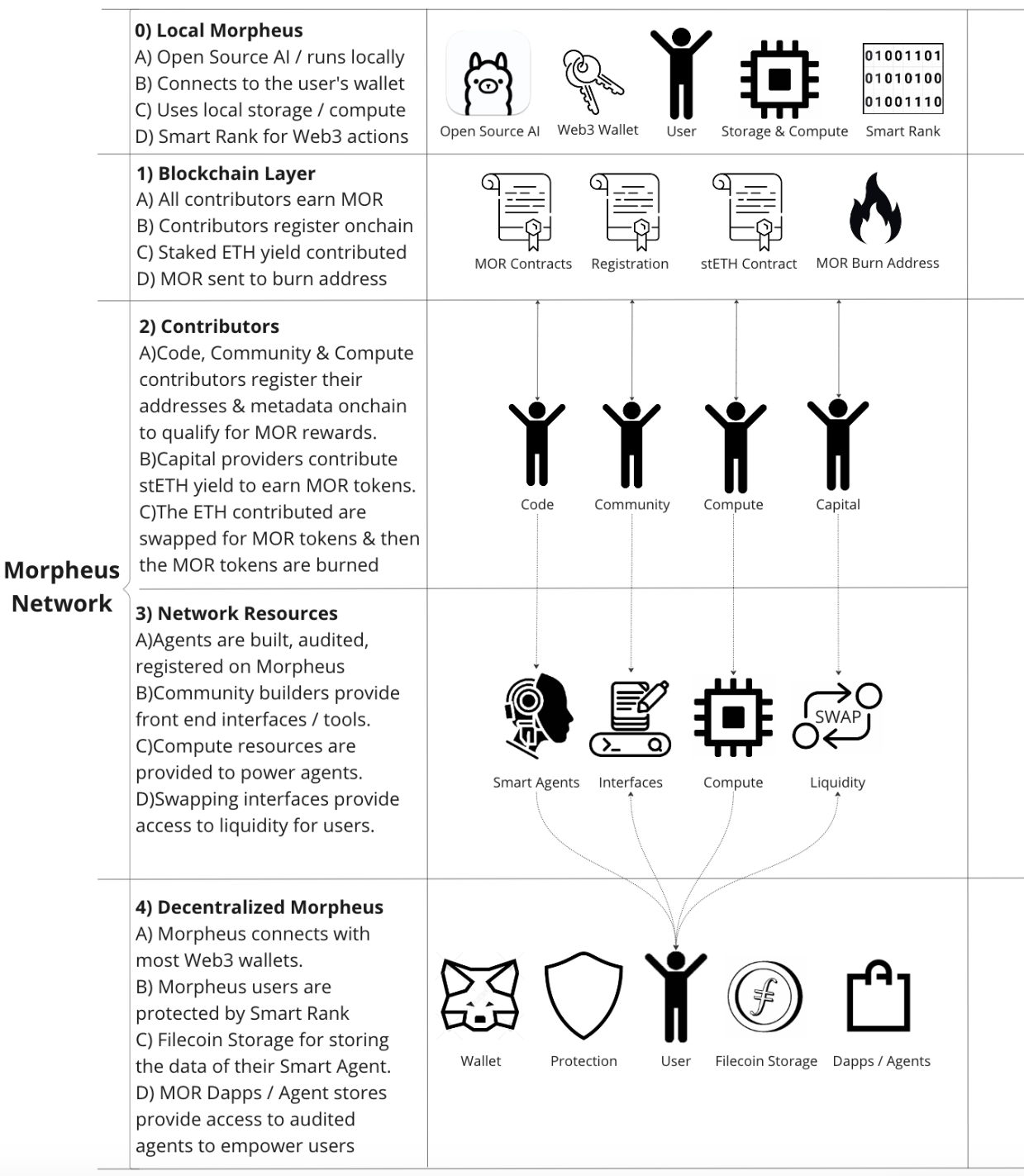

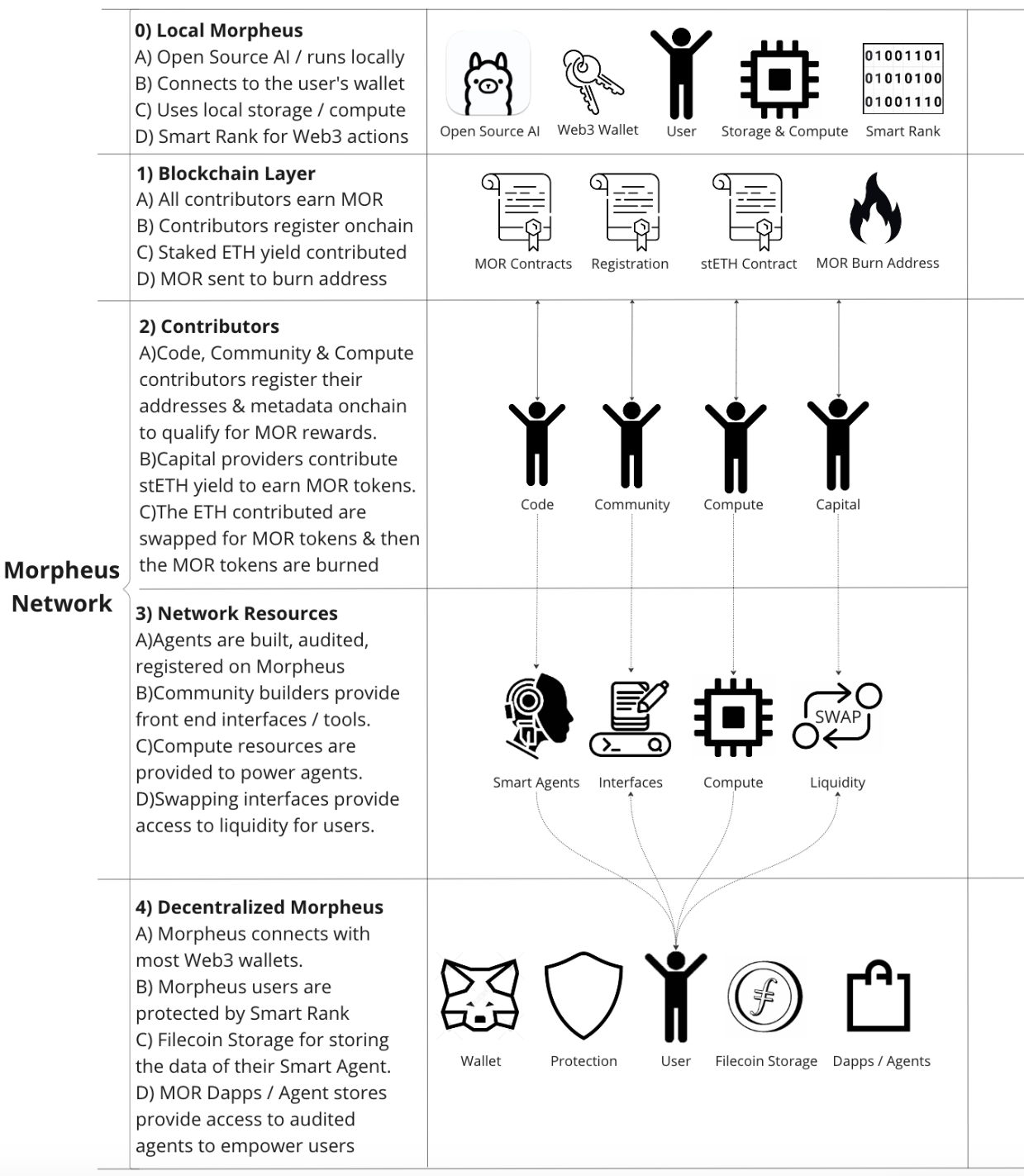

Morpheus represents a significant advancement in the field of artificial intelligence, specifically tailored for the crypto environment. Launched on September 2nd, 2023, Morpheus introduces an innovative framework for deploying personal general-purpose AI agents, known as Smart Agents, across a peer-to-peer network. The primary objective of Morpheus is to facilitate the interaction of users with decentralized applications, digital wallets, and Smart Contracts, thereby making the technology more accessible to a broader audience. The project's Yellow Paper provides a comprehensive technical overview, highlighting its ambition to bridge the gap between complex crypto technologies and everyday users through an open-source approach.

Central to Morpheus's architecture is its incorporation of several key technologies: a crypto native wallet for secure key management and transaction signing, a large language model trained on extensive data (including blockchains, wallets, DApps, DAOs and Smart Contracts), and a SmartContractRank algorithm designed to evaluate and recommend the most suitable Smart Contracts to users. Additionally, Morpheus leverages a sophisticated storage solution for the long-term retention of user data and connected applications, ensuring that Smart Agents can draw upon a comprehensive context for their operations.
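The source does not specify how SmartContractRank works. Its name suggests a PageRank-style algorithm, so the sketch below assumes exactly that: contracts are nodes, calls or user flows between them are edges, and scores come from power iteration. The function name and the contract graph are hypothetical.

```python
def rank_contracts(edges: dict[str, list[str]], damping: float = 0.85,
                   iterations: int = 50) -> dict[str, float]:
    """PageRank-style power iteration over a contract interaction graph.

    edges[a] lists the contracts that a routes calls or users to. Nodes with no
    outgoing edges spread their score evenly, so scores always sum to 1.
    """
    nodes = set(edges) | {dst for dsts in edges.values() for dst in dsts}
    if not nodes:
        return {}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = edges.get(node, [])
            if targets:
                share = damping * rank[node] / len(targets)
                for dst in targets:
                    new_rank[dst] += share
            else:
                for dst in nodes:
                    new_rank[dst] += damping * rank[node] / n
        rank = new_rank
    return rank


# Hypothetical interaction graph between contracts.
graph = {
    "wallet_router": ["dex", "lending"],
    "aggregator": ["dex"],
    "lending": ["dex"],
    "dex": ["lending"],
    "nft_market": [],
}
ranks = rank_contracts(graph)
for name, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:14s} {score:.3f}")
```

A production recommender would likely weight edges by volume or user reputation and combine the graph score with other signals; this sketch only shows the graph-ranking core.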

Morpheus's economic model revolves around the MOR token, which plays a crucial role in incentivizing various stakeholders within the ecosystem, including developers, users, and providers of computing resources. The distribution of MOR tokens is carefully structured to promote a balanced and fair contribution from all participants, mirroring the decentralized ethos of the project. By avoiding a pre-mine and ensuring a fair launch, Morpheus wants to cultivate a genuinely decentralized infrastructure for its network, reminiscent of the foundational principles seen in Bitcoin and Ethereum.

The platform seeks to address the limitations of current large language models, which are predominantly proprietary, operate within closed ecosystems, and often monetize user data. Morpheus's open-source LLM offers a stark contrast, enabling direct interaction with the Web3 ecosystem without the encumbrances of licensing fees or restrictive regulatory frameworks. This approach not only empowers users with more control over their digital interactions but also paves the way for a new paradigm in AI-driven services within the Web3 domain.

On the other side of the spectrum is MyShell, a crypto platform utilizing its own LLM for creators and users to access high quality, customizable AI agent chatbots.

Born from a vision that contemplates the current limitations and potential of AI, MyShell seeks to democratize AI technology by promoting open-source models, stimulating ecosystem-wide innovation through ecosystem incentives, ensuring fair value redistribution, and giving users and developers the freedom to choose from various AI models and computing resources. This approach seeks to break the existing monopolies and dependencies in the AI sector, fostering a landscape where creativity and contribution are rewarded and access to AI technology is broadened.

MyShell's efforts to realize this vision lie in its development platform, which is both open and compatible, facilitating the integration and utilization of diverse models and external APIs. This inclusivity enables creators of varying skill levels to either delve into native workflows or leverage a modular interface for simpler, more intuitive development processes. MyShell advocates for the creation and distribution of AI applications through an app store designed to assist creators in publishing, managing, and promoting their AI solutions. This ecosystem is underpinned by a fair reward distribution mechanism that transparently compensates all contributors, including model providers, app developers, users, and investors, ensuring that every contribution is valued and rewarded appropriately.

A cornerstone of the MyShell platform is its MyShell LLM that excels in engaging users in empathetic and interactive experiences, particularly in AI character companionship and role-play, drawing from a rich database of content from novels, movies, anime, and TV shows. Such capabilities make MyShell's LLM distinct in providing human-like interactions, enriching the platform's content and user experience.

Advancements in text-to-speech and voice cloning technologies on MyShell have significantly lowered the barriers to creating personalized and realistic audio experiences. By reducing the cost by over 99% and requiring only a 1-minute voice sample for effective voice cloning, MyShell has democratized access to these technologies. This breakthrough represents a leap forward in making AI-native content more accessible, engaging, and personalized than ever before.

While MyShell doesn’t have a token yet, they’ve mentioned in documentation that the SHELL token will eventually possess in-app functionality and current users will be distributed SHELL across a unique season-based reward model. MyShell’s traditional counterpart is Character.AI which offers very similar features in a web2 package. With the presence of crypto incentives, MyShell is looking to facilitate a more democratic approach to content generation, along with more verifiability given the presence of blockchain tech.

The next platform is Autonolas, a distinctive and expansive protocol building infrastructure, economies and marketplaces for autonomous agents on the blockchain. Autonolas is attempting to create autonomous services that operate beyond blockchain limitations, featuring continuous operation, the ability to take actions on their own, complex logic, and a robust, decentralized architecture.

It consists of the Open Autonomy framework, Autonolas Protocol, and toolkits for creating these services. Open Autonomy facilitates building agent services with command line tools and base packages, while the Autonolas Protocol manages smart contracts for service coordination and incentives. The platform encourages open-source development, rewarding contributions with NFTs representing code utility, fostering a decentralized ecosystem owned by a DAO.

Autonolas has been working hard to expand its ecosystem and product offerings cross-chain, with recent expansions to Optimism and Base to take advantage of L2 scaling solutions. Additionally, the platform has been working to further build out its suite of products to incorporate oracles, messaging, relayers and more automation. Autonolas is still fairly new, but progress has been promising and the team has been very active on its blog.

Closing thoughts

It’s easy to feel overwhelmed by everything happening in the crypto space, and the feeling is amplified when it comes to decentralized AI. It’s important to remember that all of this is very new and has a long way to go before it reaches full maturity. All of these projects are promising and attracting significant interest across the industry, but the modern age of AI is itself still quite young - the success of ChatGPT doesn’t automatically give crypto an easy path to success of its own.

Hopefully this report has given you a better understanding of the decentralized AI space and what teams consider when designing their systems. For more information, please consult the hyperlinks throughout the report to dive deeper into the ecosystems described. Thank you.

Disclaimer: This research report is exactly that — a research report. It is not intended to serve as financial advice, nor should you blindly assume that any of the information is accurate without confirming through your own research. Bitcoin, cryptocurrencies, and other digital assets are incredibly risky and nothing in this report should be considered an endorsement to buy or sell any asset. Never invest more than you are willing to lose and understand the risk that you are taking. Do your own research. All information in this report is for educational purposes only and should not be the basis for any investment decisions that you make.


What is decentralized AI?

In the swiftly evolving digital era, the emergence of decentralized AI represents a groundbreaking shift towards a more equitable, secure, and innovative technological future. This paradigm shift seeks to address the centralization concerns prevalent in the current AI landscape, characterized by a few dominant entities controlling vast amounts of data and computational resources. decentralized AI, by leveraging blockchain technology and decentralized networks, promises a new era where innovation, privacy, and access are democratized.

The last decade has witnessed unprecedented advancements in AI, propelled by breakthroughs in machine learning, particularly deep learning technologies. Entities like OpenAI and Google have been at the forefront, developing models that have significantly pushed the boundaries of what AI can achieve. Despite the success, this rapid advancement has led to a concentration of power and control, raising concerns over privacy, bias, and accessibility.

In response to these challenges, the concept of decentralized AI has gained momentum. Decentralized AI aims to distribute the development and deployment of AI across numerous independent participants. This approach is not just a technical necessity but also a philosophical stance against the monopolistic tendencies seen in the technology sector, especially within AI.

The necessity of decentralized AI

The integration of blockchain technology into AI frameworks introduces a layer of transparency, security, and integrity that is difficult to achieve in centralized systems. By recording data exchanges and model interactions on a blockchain, decentralized AI makes the history of those operations publicly auditable and tamper-evident, fostering trust among users and developers alike.
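The tamper-evidence comes from chaining: each record commits to the hash of the record before it, so editing any past entry breaks every later link. The sketch below shows only that hash-chaining idea, assuming hypothetical record fields; a real blockchain adds consensus, signatures, and replication across many nodes, and a tamper-evident log records what happened without by itself proving that a computation was correct.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class HashChainedLog:
    """Append-only log where each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes identically.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every link; any edited record or broken link fails the check.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

For example, appending an inference record and a data-contribution record and then silently changing the model name in the first one makes `verify()` return `False`.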

One of the cornerstone challenges decentralized AI addresses is data management. In a decentralized AI ecosystem, data ownership remains with individuals, who can choose to contribute their data for AI training in a secure, anonymous manner. This not only enhances privacy but can also encourage the development of more diverse and less biased AI models.

Decentralized AI also proposes a novel approach to computational resource allocation, in which individuals can contribute their computing power to AI model training. This peer-to-peer network model aims for a more equitable distribution of computational resources, making AI development accessible to a broader audience. To motivate participation in the network, these systems use tokenization mechanisms, something the walled gardens of centralized AI incumbents don’t offer. Before we dig deeper, here is a market map of the decentralized AI landscape, courtesy of Galaxy:

Contributors of data and computational resources are rewarded with tokens, which can be used within the ecosystem or traded, creating an economy around AI development and deployment. While decentralized AI presents a promising solution to many of the current AI ecosystem's flaws, it is not without challenges. Issues such as network scalability, consensus mechanisms for model training, and ensuring the quality of data in a distributed system are critical areas requiring further progress.
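To make the reward idea concrete, here is one simple way a network could split a fixed per-epoch token emission among compute contributors in proportion to the work they supplied. This is a hypothetical sketch: the unit of contribution (for example, verified GPU-hours) and the function name are assumptions, and real networks use a wide variety of reward schemes. Rewards are kept as integers, as token balances usually are, and the largest-remainder method ensures that no tokens are lost to rounding.

```python
def distribute_rewards(epoch_reward: int, contributions: dict[str, int]) -> dict[str, int]:
    """Split an integer token reward pro rata to contributed compute units.

    Hypothetical scheme: each contributor gets the floor of its exact share, and
    the few leftover tokens go to the largest fractional remainders, so the
    payouts always sum exactly to epoch_reward.
    """
    total = sum(contributions.values())
    if total == 0:
        return {who: 0 for who in contributions}
    # Integer floor share for each contributor, plus what rounding left over.
    shares = {who: epoch_reward * units // total for who, units in contributions.items()}
    remainders = {who: epoch_reward * units % total for who, units in contributions.items()}
    leftover = epoch_reward - sum(shares.values())
    # Hand out leftover tokens one at a time, largest remainder first (ties by name).
    for who in sorted(remainders, key=lambda w: (-remainders[w], w))[:leftover]:
        shares[who] += 1
    return shares
```

For instance, `distribute_rewards(100, {"alice": 1, "bob": 1, "carol": 1})` pays 34, 33 and 33 tokens: each floor share is 33, and the single leftover token goes to the first name in the tie-break order.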

Despite these challenges, blockchain-enabled AI has real potential to broaden participation in AI development. By lowering the barriers to entry and ensuring a more diverse data pool, decentralized AI could lead to the creation of more robust, ethical, and innovative AI solutions.

As AI continues to evolve, it will also prompt a reevaluation of current policies and regulations surrounding AI and data privacy. A distributed landscape presents lawmakers with a unique set of challenges and opportunities, calling for a balanced approach that fosters innovation while protecting individual rights. The future of AI is not just about technological advancement but also about reimagining the governance and economic models underpinning AI development. As we move forward, the focus will increasingly shift towards ensuring that AI serves the broader interests of society, promoting fairness, transparency, and inclusivity.

The journey towards a decentralized AI ecosystem is fraught with technical, ethical, and regulatory challenges, but the promise of a more equitable, secure, and innovative future makes it a worthy endeavor. As we stand at the precipice of this new era in AI, it is crucial for stakeholders across the spectrum to collaborate, innovate, and navigate the complexities of decentralized AI, ensuring that the future of AI is democratized and beneficial for all.

Verification mechanisms

One of the major benefits of decentralized AI is the ability to verify outputs. When users interact with closed-source LLMs, they have no way to confirm that the model serving their requests is actually the model they were told they are using. Decentralized AI projects are taking various approaches to eliminate this issue. Some believe optimistic fraud proofs (optimistic ML, or opML) are superior, while others favor zero-knowledge proofs (zkML); there isn’t a correct answer to this question yet.
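Both the optimistic and zero-knowledge approaches start from the same primitive: the operator publishes a commitment to the exact model weights, and later fraud proofs or validity proofs are checked against that commitment. The sketch below computes such a commitment as a SHA-256 hash. The flat dictionary of float lists is a hypothetical stand-in for a real checkpoint format. Note that a hash alone only pins down *which* weights were committed; proving that a given output was actually computed with them is the job of opML or zkML.

```python
import hashlib
import struct


def commit_to_weights(weights: dict[str, list[float]]) -> str:
    """Return a SHA-256 commitment to a model's weights.

    Tensors are hashed in sorted-name order, with each name and array
    length-prefixed and values packed as little-endian float64, so identical
    weights always give the identical digest regardless of dict order.
    """
    h = hashlib.sha256()
    for name in sorted(weights):
        name_bytes = name.encode("utf-8")
        values = weights[name]
        h.update(struct.pack("<I", len(name_bytes)))
        h.update(name_bytes)
        h.update(struct.pack("<I", len(values)))
        h.update(struct.pack(f"<{len(values)}d", *values))
    return h.hexdigest()
```

Changing even a single weight in the least significant digits produces a completely different digest, which is what lets a verifier detect a silently swapped model.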

Blockchains are designed to be immutable, cryptographically verifiable ledgers for peer-to-peer payments, and integrating blockchain technology into artificial intelligence systems is a major opportunity for two growing industries to complement each other in a mutually beneficial way. If you believe that blockchains are here to stay and will integrate with traditional financial systems for the better, why not advocate for blockchains extending their utility into machine learning as well?

Optimistic machine learning provides verification that starts from a presumption of honesty. Every inference result is assumed to be correct, much like optimistic rollups on Ethereum assume a posted state is valid unless proven otherwise. Projects implement this in various ways, but most use a system in which results are posted and watchers (or verifiers) can re-check them during a challenge window, submitting a fraud proof if they find an incorrect output. Optimistic machine learning is attractive because, in theory, it remains secure as long as a single honest watcher checks each result. In practice, no single individual or actor can check every output, and although the optimistic approach is cheaper than zero-knowledge proofs, checking a result means re-executing the computation, so its costs unfortunately scale linearly with the work being verified.
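The challenge-window flow can be sketched in a few lines. This is a simplified, hypothetical model, not any project's actual contract: results are accepted without checking, a watcher may re-run a deterministic reference model within the window, and a mismatch marks the claim as slashed. The toy `model` function and integer inputs stand in for real inference, and bond payouts are only noted in a comment.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Claim:
    input_value: int
    claimed_output: int
    submitted_at: int  # time step (e.g. block height) when the result was posted
    bond: int          # stake the submitter puts at risk
    slashed: bool = False


class OptimisticVerifier:
    """Accept results by default; let anyone prove fraud within a challenge window."""

    def __init__(self, model: Callable[[int], int], challenge_window: int):
        self.model = model  # deterministic reference model that watchers can re-run
        self.challenge_window = challenge_window
        self.claims: list[Claim] = []

    def submit(self, input_value: int, claimed_output: int, now: int, bond: int) -> int:
        # The result is recorded and provisionally accepted without any checking.
        self.claims.append(Claim(input_value, claimed_output, now, bond))
        return len(self.claims) - 1

    def challenge(self, claim_id: int, now: int) -> bool:
        """A watcher re-executes the model; returns True if fraud is proven."""
        claim = self.claims[claim_id]
        if claim.slashed or now > claim.submitted_at + self.challenge_window:
            return False  # already slashed, or the window has closed
        # Re-execution costs as much as the original inference: the linear cost.
        if self.model(claim.input_value) != claim.claimed_output:
            claim.slashed = True  # a real protocol would also forfeit the bond here
            return True
        return False

    def is_final(self, claim_id: int, now: int) -> bool:
        claim = self.claims[claim_id]
        return not claim.slashed and now > claim.submitted_at + self.challenge_window
```

With `model=lambda x: 3 * x + 1` and a 10-step window, an honest claim `(5, 16)` survives challenges and becomes final, a false claim `(5, 99)` is slashed when challenged in time, and a false claim that nobody challenges before the window closes still becomes final. That last case is the single-honest-watcher assumption in action.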

Zero-knowledge proofs are more efficient to verify, as proof size and verification cost do not scale linearly with model size. The mathematics behind zero-knowledge proving is quite complex, but it has become more accessible thanks to projects like Starknet, zkSync, RiscZero and Axiom, which are developing rollups and middleware that bring the benefits of zero-knowledge proving to blockchains. Because a valid proof cannot be produced for an incorrect computation (barring bugs in the proof system or its circuits), zero-knowledge proofs are close to an ideal choice for verifying outputs: you cannot cheat the math behind them. Unfortunately for decentralized AI, generating these proofs for large models is extremely expensive and not currently feasible at scale. Despite this reality, many projects are building out infrastructure for the gradual integration of zero-knowledge technology and are making positive strides in this sector.
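A full zkML proof covers an entire model inference and relies on SNARK or STARK proof systems, which are far beyond the scope of a short example. The same core pattern can be seen on a much simpler statement: a Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. The prover convinces anyone that it knows a secret `x` behind a public value without revealing `x`, and checking the proof takes a fixed, small amount of work. This is an illustrative swap-in technique, not how any of the projects named above construct their proofs. The group parameters are deliberately tiny and insecure.

```python
import hashlib
import secrets

# Toy group: P = 2Q + 1 is a safe prime and G = 4 generates the subgroup of
# prime order Q. Far too small to be secure; for illustration only.
P = 2039
Q = 1019
G = 4


def _challenge(y: int, t: int) -> int:
    # Fiat-Shamir: derive the verifier's challenge by hashing the public values.
    digest = hashlib.sha256(f"{G}|{y}|{t}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)  # fresh random nonce; reusing it would leak x
    t = pow(G, r, P)          # commitment
    c = _challenge(y, t)
    s = (r + c * x) % Q       # response
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Accept iff G^s == t * y^c (mod P): two exponentiations, whatever x is."""
    c = _challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The check works because `G` has order `Q`, so `G^s = G^r * (G^x)^c = t * y^c`. Altering the response `s` by even one makes verification fail, which is the "you cannot cheat the math" property on a small scale.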

Data and infrastructure

LLMs are trained on immense amounts of data, and a great deal of work happens between pre-training a model and getting it production-ready for general use. The pre-training stage involves gathering data from wherever it is available, curating it (filtering and deduplicating) so the model learns from a high-quality knowledge base, and tokenizing it to feed into the model. In the context of decentralized AI, blockchains seem like a natural fit, given the immutable catalogs of data available on-chain in a format that cannot be altered after the fact. Blockchains are not, however, capable of computing over that much data: every node re-executes every transaction, throughput is measured in transactions per second, and training-scale computation on-chain is out of the question for the foreseeable future.
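The gather, curate, and tokenize steps can be sketched as follows. This is a deliberately simplified, hypothetical pipeline: curation here only normalizes whitespace, drops very short documents, and removes exact duplicates, and the tokenizer is a word-level one. Production LLM pipelines use much heavier filtering, near-duplicate detection, and subword tokenizers such as BPE.

```python
import hashlib
import re

TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")  # words, or single punctuation marks


def curate(documents: list[str], min_words: int = 5) -> list[str]:
    """Normalize whitespace, drop very short documents, remove exact duplicates."""
    seen: set[str] = set()
    kept = []
    for doc in documents:
        text = " ".join(doc.split())
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        fingerprint = hashlib.sha256(text.lower().encode()).hexdigest()
        if fingerprint in seen:
            continue  # exact duplicate (ignoring case and spacing)
        seen.add(fingerprint)
        kept.append(text)
    return kept


def build_vocab(documents: list[str]) -> dict[str, int]:
    """Assign each token an ID in order of first appearance; 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for doc in documents:
        for tok in TOKEN_PATTERN.findall(doc.lower()):
            vocab.setdefault(tok, len(vocab))
    return vocab


def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Map text to token IDs, using 0 for tokens outside the vocabulary."""
    return [vocab.get(tok, 0) for tok in TOKEN_PATTERN.findall(text.lower())]
```

Curating before tokenizing keeps duplicates and junk out of the vocabulary and avoids spending tokenization and training compute on data that would be discarded anyway.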

Numerous decentralized AI projects reward users for submitting data, with a verification process applied afterwards to ensure submissions are of high quality. Integrating cryptoeconomic reward mechanisms enables users to take part in the creation of large, open-source models that could potentially one day compete with the centralized alternatives dominating the market. As it stands, very few teams have accumulated data at the scale available to centralized models, which is unsurprising given just how nascent the sector of decentralized AI is.
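One simple way to apply quality checks before paying for data is to have several independent validators score each submission and to accept it only if the *median* score clears a threshold. The median limits how far a single dishonest or careless validator can move the outcome. This is a hypothetical mechanism for illustration: the score range, threshold, and minimum number of reviews are assumptions, and real projects use many different designs.

```python
from statistics import median


def accept_submissions(
    scores: dict[str, list[float]],
    threshold: float = 0.7,
    min_reviews: int = 3,
) -> list[str]:
    """Return IDs of data submissions whose median validator score meets the threshold.

    scores maps a submission ID to the quality scores (0.0 to 1.0) it received
    from independent validators. Submissions with too few reviews stay pending.
    """
    accepted = []
    for submission_id, reviews in scores.items():
        if len(reviews) < min_reviews:
            continue  # not enough independent reviews yet
        if median(reviews) >= threshold:
            accepted.append(submission_id)
    return accepted
```

With three reviews each, a submission scored `[0.9, 0.8, 0.1]` is accepted despite one low outlier, while `[0.2, 0.3, 1.0]` is rejected despite one high outlier. Only accepted submissions would then be eligible for token rewards.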

In decentralized AI, there isn’t a standardized mission across protocols: they are all pursuing different approaches and increasingly competing for compute as more of them launch. Teams are still deciding how best to incentivize their users, as well as exploring which types of data will be most useful to them. On-chain data is so voluminous that sifting through it raw is tedious, so many teams are building structured data products and applications that non-AI crypto projects can integrate more easily. A few projects are also aiming to corner the market on data or shape it into streamlined, highly useful products for end users; more on this in a later section. Regardless of its immediate applicability, there is a war for data underway in traditional machine learning, and decentralized AI is no different.
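Structuring on-chain data usually means turning raw event logs into per-entity summaries that other applications, or models, can consume directly. The sketch below aggregates raw token transfer events into per-address features. The `Transfer` event shape and the chosen features are hypothetical. Real pipelines decode logs from a node or indexer and compute far richer features.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    block: int
    sender: str
    receiver: str
    amount: int


def address_features(transfers: list[Transfer]) -> dict[str, dict[str, int]]:
    """Aggregate raw transfer events into per-address summary features."""
    sent: dict[str, int] = defaultdict(int)
    received: dict[str, int] = defaultdict(int)
    tx_count: dict[str, int] = defaultdict(int)
    counterparties: dict[str, set] = defaultdict(set)
    first_seen: dict[str, int] = {}
    # Process in block order so first_seen records the earliest appearance.
    for t in sorted(transfers, key=lambda t: t.block):
        sent[t.sender] += t.amount
        received[t.receiver] += t.amount
        for addr, other in ((t.sender, t.receiver), (t.receiver, t.sender)):
            tx_count[addr] += 1
            counterparties[addr].add(other)
            first_seen.setdefault(addr, t.block)
    return {
        addr: {
            "sent": sent[addr],
            "received": received[addr],
            "net_flow": received[addr] - sent[addr],
            "tx_count": tx_count[addr],
            "unique_counterparties": len(counterparties[addr]),
            "first_seen_block": first_seen[addr],
        }
        for addr in tx_count
    }
```

The output is a small, regular table keyed by address, which is far easier for a downstream application or model to use than scanning raw blocks.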

Platforms for AI and crypto collaboration

While still early in its lifecycle, decentralized AI is already seeing teams build platforms that host entire ecosystems and product suites designed with the future in mind. OpenAI is the best real-world example of a successful AI lab that has been able to develop additional products on top of brilliant models. In crypto, there is still a large gap between innovation and usage, even outside of decentralized AI.

Teams building within the decentralized AI space are taking various approaches to platform building. Some focus on consumer platforms that combine models with appealing UX, others work on infrastructure for autonomous agents, and still others build large networks with subnetworks for other models operating underneath. No approach has yet proven itself the winner or shown what will work in the long run, so different strategies are unfolding as more developers enter the space.

These will be examined in the next sections, but first we will draw a few distinctions. The idea of a decentralized platform is a little ambiguous: most of these protocols have visions bold enough that their ideas might be misconstrued as platforms. For the sake of simplicity, we’ll define a decentralized AI platform as any product that’s built to stand on its own with an eventual ecosystem of additional apps built atop it. There are numerous GPU marketplaces being built in the decentralized AI sector, and while these are technically platforms, they are limited in scope and are usually built with different goals than the protocols we will discuss.

A closer look at the decentralized AI ecosystem

With that context, we can take a look at a handful of the most promising protocols across these various subsectors. Many more could be analyzed, but we chose a curated list to provide as diverse a set of examples as possible. For more information on decentralized AI, there are a few excellent reports written here and here.

Ritual, Ora, Truebit and Modulus

We start with Ritual, a distinctive protocol that has been attracting significant interest from nearly every corner of the crypto industry. Founded by a team of experienced builders in the crypto and AI space, Ritual is envisioned as an open, modular, and sovereign execution layer for AI.

The core of Ritual is Infernet, a decentralized oracle network (DON) optimized for AI that is already live and operational. Infernet lets existing protocols and applications integrate AI models with minimal friction by exposing interfaces that smart contracts can call to request inference from these models. This enables a wide range of use cases, from advanced analytics and predictions to generative AI applications integrated with on-chain logic.
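Oracle networks like this generally follow a request/callback pattern. A contract records an inference request on-chain, an off-chain node picks it up and runs the model, and the node delivers the result back through a callback. The sketch below models that flow in plain Python. Every name in it (`ToyOracleNetwork`, `request`, `run_node`, the `"sentiment-v0"` model) is hypothetical and is not Infernet's actual SDK or interface. Real networks also handle payment, node selection, and result verification.

```python
import itertools
from dataclasses import dataclass
from typing import Callable


@dataclass
class InferenceRequest:
    request_id: int
    model_id: str
    prompt: str
    callback: Callable[[int, str], None]


class ToyOracleNetwork:
    """Hypothetical request/callback flow between contracts and off-chain nodes."""

    def __init__(self) -> None:
        self._ids = itertools.count()
        self.pending: dict[int, InferenceRequest] = {}

    def request(self, model_id: str, prompt: str, callback: Callable[[int, str], None]) -> int:
        # "On-chain" step: record the request (in practice, an emitted event).
        req = InferenceRequest(next(self._ids), model_id, prompt, callback)
        self.pending[req.request_id] = req
        return req.request_id

    def run_node(self, models: dict[str, Callable[[str], str]]) -> int:
        # "Off-chain" step: a node serves pending requests and delivers results.
        fulfilled = 0
        for request_id in list(self.pending):
            req = self.pending.pop(request_id)
            output = models[req.model_id](req.prompt)
            req.callback(req.request_id, output)
            fulfilled += 1
        return fulfilled


class SentimentConsumer:
    """Stand-in for a consumer contract that stores results delivered by the oracle."""

    def __init__(self) -> None:
        self.results: dict[int, str] = {}

    def on_result(self, request_id: int, output: str) -> None:
        self.results[request_id] = output
```

The key design point is that the contract never runs the model itself. It only records the request and later accepts the delivered result, which keeps expensive inference off-chain.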

Beyond Infernet, Ritual is also building its own sovereign blockchain with a custom VM to serve more advanced AI-native applications. This chain will leverage cutting-edge cryptography such as zero-knowledge proofs to ensure scalability and to provide strong guarantees around computational integrity, privacy, and censorship resistance, issues that plague current centralized AI infrastructure. Ritual's grand vision is to become the "Schelling point" for AI in the crypto space, allowing every protocol and application to use its AI capabilities as a co-processor.

Ora is a crypto project pioneering the integration of artificial intelligence directly onto the Ethereum blockchain. Ora's central offering is the Onchain AI Oracle (OAO), which enables developers, protocols, and dApps to use powerful AI models like Llama 2, Grok, and Stable Diffusion directly on Ethereum mainnet.

Ora's innovation lies in its use of optimistic machine learning to make complex ML computations verifiable on-chain at a feasible cost. This unlocks a new frontier of "trustless AI": AI models and inferences that can be integrated into blockchain applications without trusting the operator, backed by fraud proofs. Beyond inference, Ora envisions a wide range of use cases for on-chain AI, from predictive analytics and asset pricing to advanced simulations, credit and risk analysis, and even AI-powered trading bots and governance systems.

The project has also introduced the "opp/ai" framework, which combines opML with zero-knowledge ML (zkML) to provide both efficiency and privacy for on-chain AI use cases. Ora is building an open ecosystem around its Onchain AI Oracle, inviting developers to integrate their own AI models and experiment with the many possibilities of trustless AI on Ethereum. With its strong technical foundations and ambitious vision, Ora is well positioned to be a key player at the emerging intersection of crypto and artificial intelligence.

Truebit is a platform that aims to change the way developers build and deploy decentralized applications. Truebit offers verified computing infrastructure, enabling the creation of certified, interoperable applications that can integrate with any data source, interact across multiple blockchains, and execute complex off-chain computations.

The key innovation behind Truebit is its focus on "transparent computation". Rather than relying solely on on-chain execution, Truebit uses a decentralized network of nodes to perform complex computations off-chain while keeping their correctness verifiable. This approach allows developers to unlock the full potential of Web3 by offloading the majority of their application logic to the Truebit platform, without sacrificing the trustless guarantees and auditability that blockchain-based systems provide.
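Truebit's original whitepaper settled disputes about off-chain results with an interactive "verification game". A solver and a challenger binary-search over the intermediate states of the computation until they isolate a single step they disagree on, and only that one step is re-executed on-chain. The sketch below illustrates that bisection idea. It does not claim to reflect how Truebit's current platform works. The integer `step` function is a toy stand-in for one step of a real machine, and real protocols exchange hashes of states rather than the states themselves.

```python
from typing import Callable


def run_trace(step: Callable[[int], int], state: int, n_steps: int) -> list[int]:
    """Return the full execution trace [s0, s1, ..., sn] of a step function."""
    trace = [state]
    for _ in range(n_steps):
        state = step(state)
        trace.append(state)
    return trace


def bisect_dispute(solver_trace: list[int], challenger_trace: list[int]) -> int:
    """Find an index i where the traces agree at i but disagree at i + 1.

    Assumes both traces share the same starting state and differ in their final
    state. Takes O(log n) rounds of comparing intermediate states.
    """
    lo, hi = 0, len(solver_trace) - 1  # invariant: agree at lo, disagree at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if solver_trace[mid] == challenger_trace[mid]:
            lo = mid
        else:
            hi = mid
    return lo


def referee(step: Callable[[int], int], solver_trace: list[int], challenger_trace: list[int]) -> str:
    """Re-execute only the single disputed step to decide who is wrong."""
    i = bisect_dispute(solver_trace, challenger_trace)
    correct_next = step(solver_trace[i])  # both parties agree on state i
    if solver_trace[i + 1] == correct_next:
        return "challenger loses"
    return "solver loses"
```

However long the computation, the on-chain referee performs one step of execution plus a logarithmic number of comparisons. That is what makes this approach cheaper than re-running everything on-chain.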